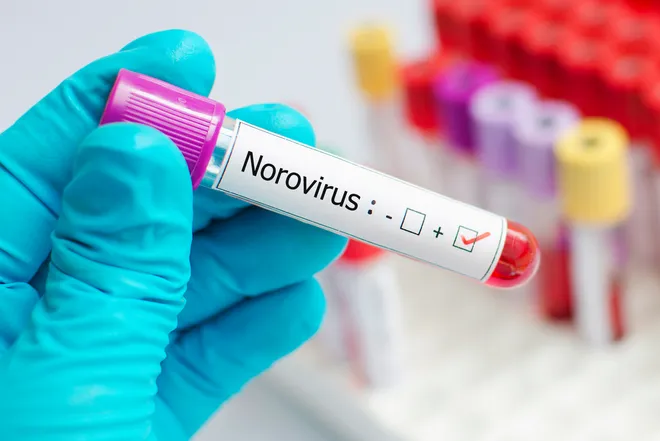

In recent years, cyber fraud has evolved dramatically, from simple email deceptions to sophisticated schemes that use GenAI technologies to create deepfakes capable of mimicking a real person's face, body movement, voice, and accent. Lawsuits are being filed worldwide to block or take down these deepfakes under copyright infringement laws. However, relying on the courts alone to prevent this misuse is insufficient; the state must also enforce policy at the AI level.

A multinational company's CFO was recently impersonated on a video call using deepfake technology. The AI-generated facade was used to authorize the transfer of nearly $25 million into several local bank accounts. The employee, initially skeptical, was convinced after a video chat with what appeared to be her CFO. Because legal responses generally come after the fact, the reputational, financial, or societal harm caused by a deepfake has already been done by the time they take effect; they neither prevent deepfakes nor reduce their number. Nor do they deter the AI platforms used as tools to create these deepfakes. Most remedies are limited to having the deepfakes taken down from social media.

However, generative AI (GenAI) dramatically alters the landscape, bypassing human intervention and producing works, the deepfake among them, almost entirely by software. These convincing falsifications are powered by advanced AI tools that combine machine learning and neural networks, many of which are available on the Internet.
